Interactive Audio

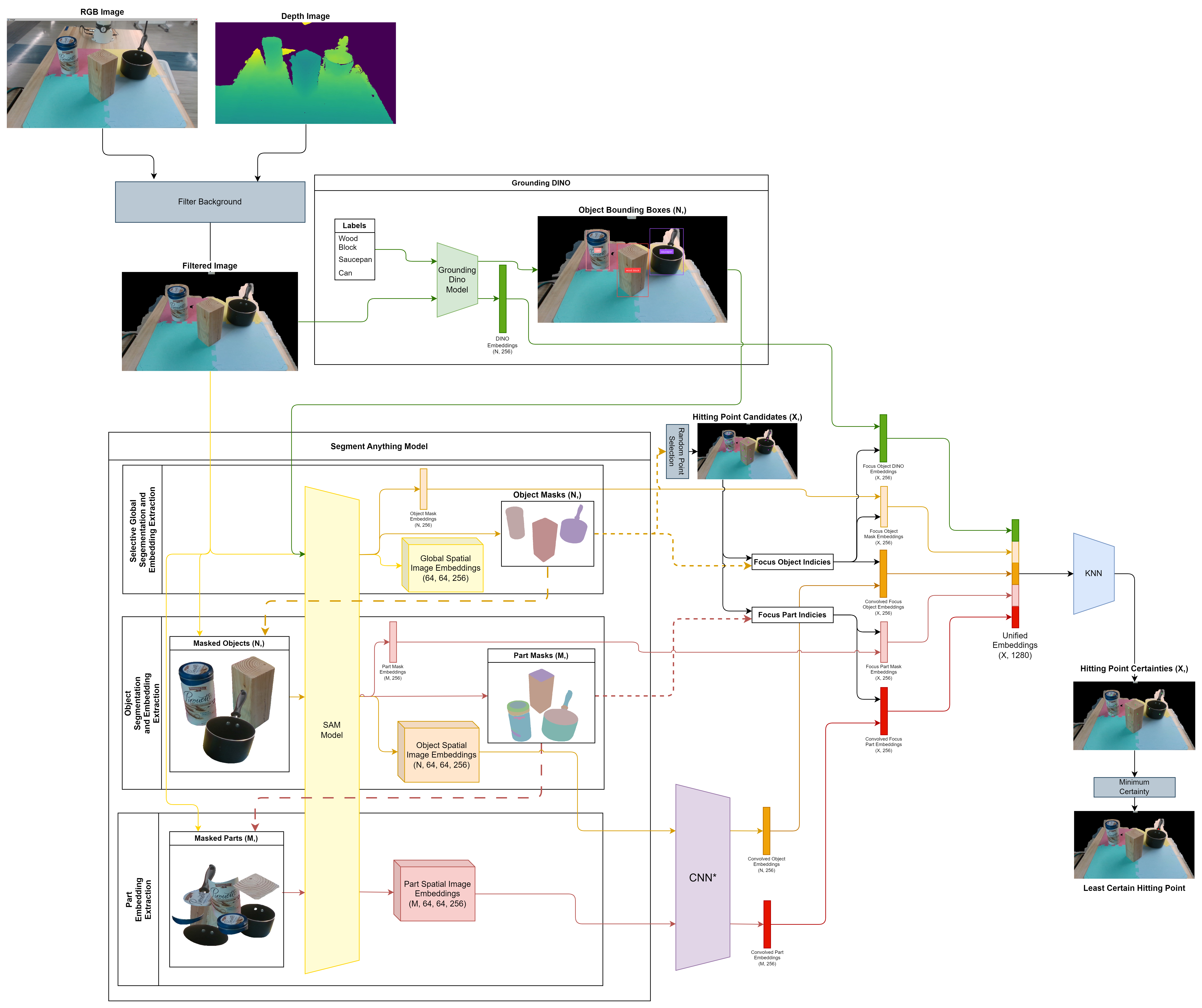

Interactive Audio is a project designed to advance the capabilities of robotic systems in identifying and classifying objects based on their material composition using both visual and audio cues. We utilized Grounded SAM for object detection and masking, while incorporating custom-designed tools to capture accurate audio signatures of objects. The ultimate goal is for the robot to differentiate objects not just by appearance but also by their unique acoustic properties when tapped.

Key Design Elements

Object Tapper Tool Design

One of my primary contributions to this project was the design of a specialized “object tapper,” a tool equipped with a directional microphone. This tapper provided a prime point of contact for obtaining clear audio signatures by tapping objects and recording their unique sound responses.

- Material Selection: After several iterations, we opted for flexible PLA plastic over earlier designs using rubber bands and elastics, which wore down quickly. PLA’s durability and flexibility made it ideal for delivering consistent, gentle taps without damaging the object or causing it to tip over. Unlike PETG, which has higher rigidity and less flexibility, PLA ensures the tool maintains the necessary elasticity over repeated uses, reducing the need for frequent replacements.

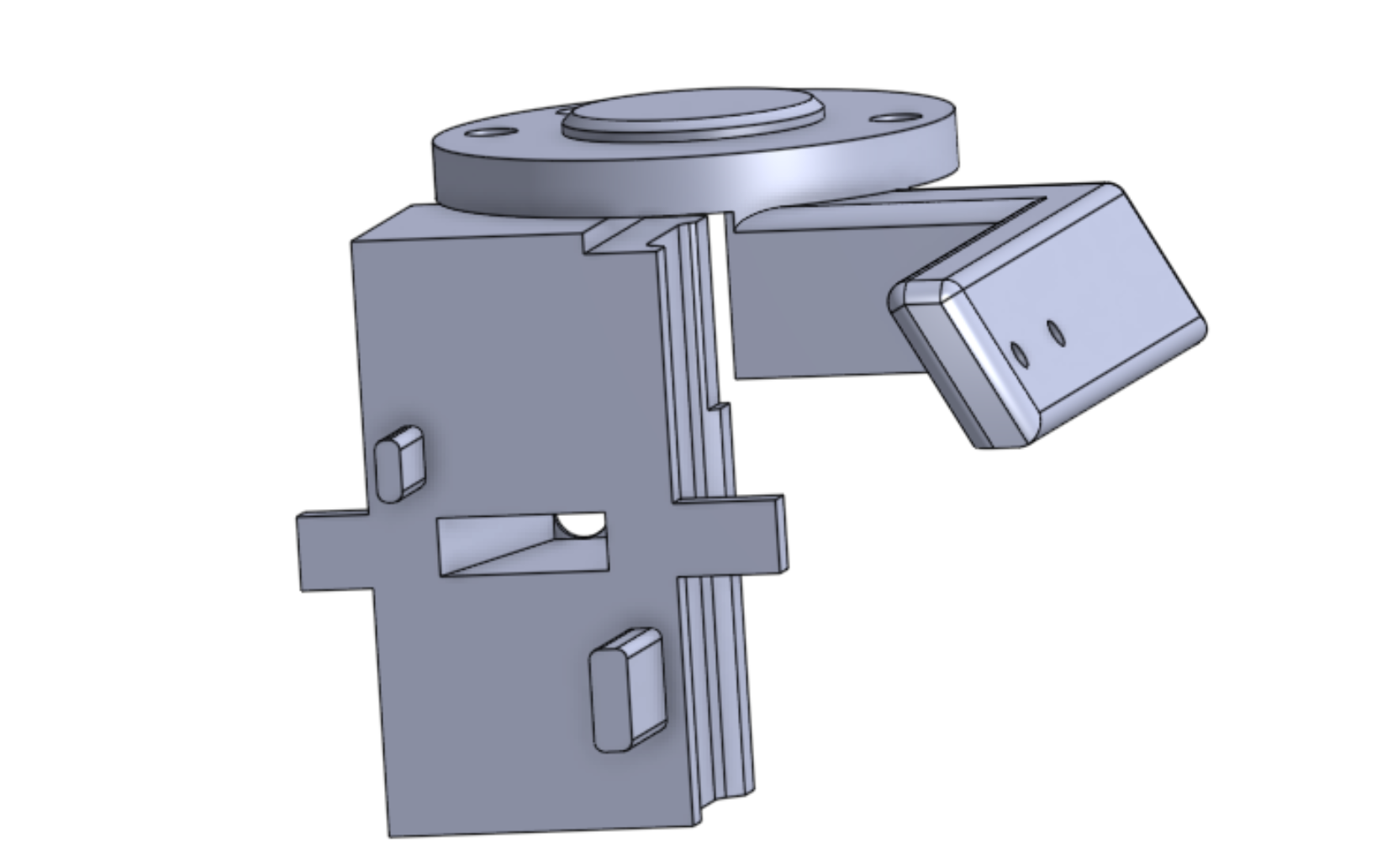

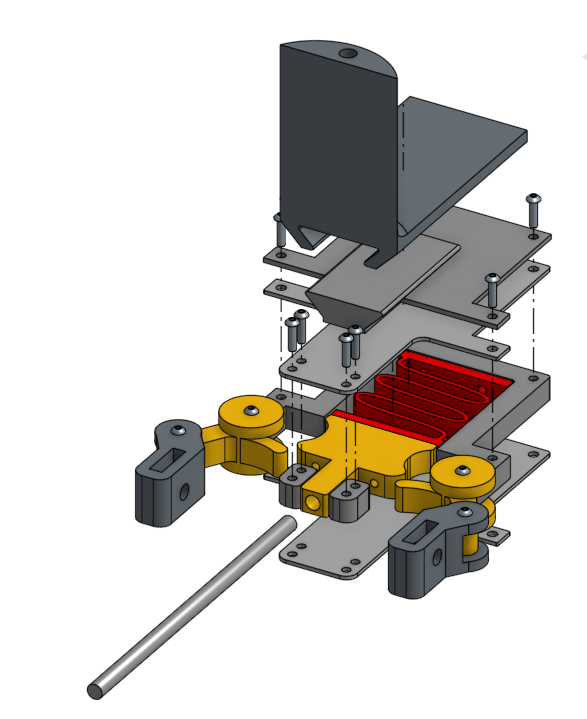

Figure 1: Initial CAD design without custom mounts, relying on elastics wrapped around side hooks to prevent the “object tapper” from knocking over objects.

Original CAD Design with Custom Mounts

- Design Consideration: The tool needed enough “give” to keep objects from tipping over during taps while still making contact firm enough for effective sound capture. This required balancing impact force against material flexibility. The design also accounted for impulse and momentum transfer, so that each tap delivered just enough force without exciting excess vibrations that could skew the audio recording. Newton’s Second Law (F = ma) guided the force application needed for controlled taps.

-

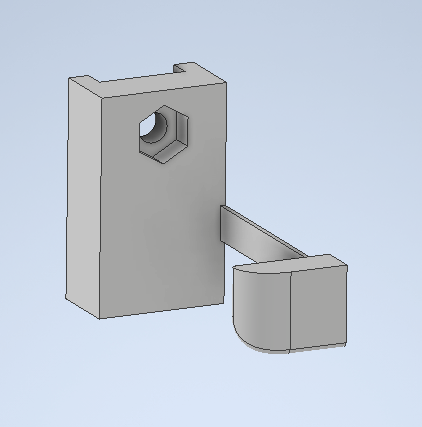

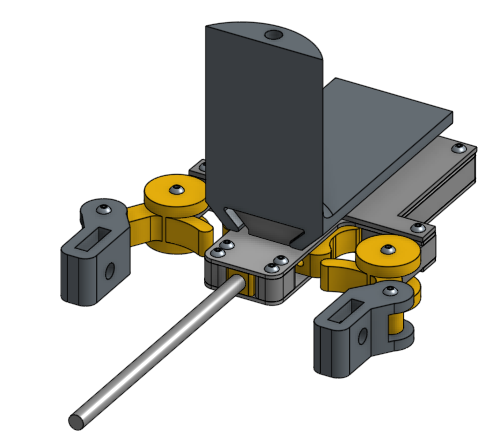

Challenges: Earlier versions that incorporated elastics often wore down due to repeated use, reducing both their elasticity and reliability. One can see that in the final design not only is there a “plastic tab” allowing for more consistent collision feedback, but there is also a custom mount designed to integrate comfortably with the franka emika panda allowing for smoother trajectory execution. Additionally there is a camera mount strategically placed to identify the object before contact (the camera should never collide with the object).Transitioning to PLA provided a more consistent tap mechanism, greatly improving the longevity and consistency of our recordings.

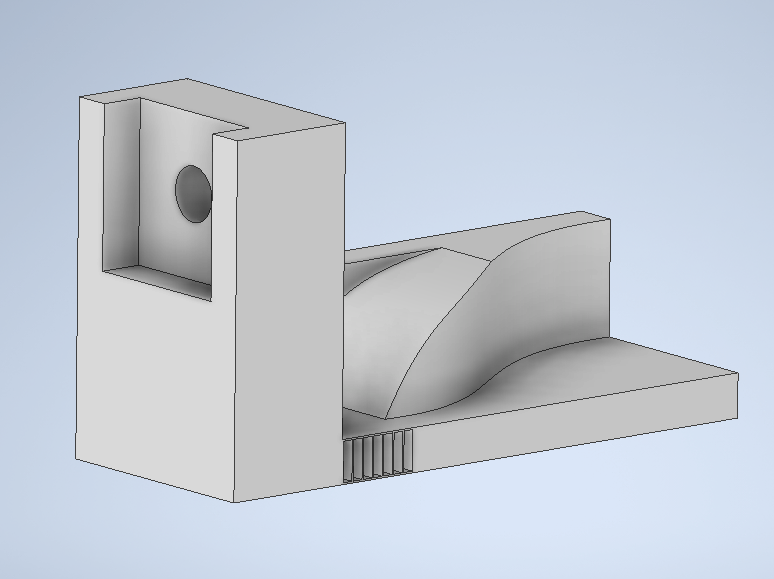

Figure 2: Third revision (final) of the CAD design, with custom mounts and a PLA tab on the right side for consistent contact and audio signatures, plus mounting locations for microphones and cameras.

Figure 3: Third revision (final) of the CAD design, with custom mounts and a PLA tab on the right side for consistent contact and audio signatures, plus mounting locations for microphones and cameras.

Audio Sampling and Material Correlation

The directional microphone mounted on the tapper was used to gather clean and isolated audio samples of various objects. These audio signatures were then used to correlate sound to material composition, forming the foundation for material classification in the system.

Figure 4: The fabricated tapper in action during robot data collection

- Physics Principles: The tapping mechanism was designed based on the concept of sound wave propagation and the relationship between material density and the speed of sound. By ensuring a uniform tapping force, the sound waves generated by each object were consistent, which helped in classifying materials based on their acoustic signatures.

- Generative Ensemble Model: I helped develop a Generative Ensemble model that linked these audio recordings to specific material properties. The model used the distinct frequencies, amplitudes, and decay rates of each object’s sound to differentiate between materials such as wood, plastic, and metal. By integrating this into our system, the robot could classify objects more effectively by considering not only their appearance but also their sound profile.
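To make the three cues named above concrete (frequency, amplitude, and decay rate), here is a minimal sketch of how they could be pulled from a single tap recording with NumPy. It is not the project's actual feature pipeline: the function name `tap_features`, the frame length, and the synthetic test signal are all assumptions made for illustration.

```python
import numpy as np

def tap_features(signal, sample_rate, frame_len=256):
    """Extract simple descriptors from one tap recording (hypothetical helper)."""
    signal = np.asarray(signal, dtype=float)

    # Frequency content: magnitude spectrum of the whole recording.
    magnitude = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / sample_rate)
    dominant_hz = freqs[np.argmax(magnitude)]
    centroid_hz = np.sum(freqs * magnitude) / np.sum(magnitude)

    # Amplitude: largest absolute sample value.
    peak_amplitude = np.max(np.abs(signal))

    # Decay rate: slope of log(RMS) over non-overlapping frames.
    n_frames = signal.size // frame_len
    frames = signal[: n_frames * frame_len].reshape(n_frames, frame_len)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    times = (np.arange(n_frames) + 0.5) * frame_len / sample_rate
    keep = rms > 0  # avoid log(0) on silent frames
    slope, _ = np.polyfit(times[keep], np.log(rms[keep]), 1)

    return {
        "dominant_hz": float(dominant_hz),
        "centroid_hz": float(centroid_hz),
        "peak_amplitude": float(peak_amplitude),
        "decay_rate_per_s": float(-slope),
    }

# Synthetic stand-in for a tap: a 1 kHz tone decaying at 20 per second.
sample_rate = 16_000
t = np.arange(8000) / sample_rate
synthetic_tap = np.exp(-20.0 * t) * np.sin(2 * np.pi * 1000.0 * t)

features = tap_features(synthetic_tap, sample_rate)
print(features)
```

A real recording would have several resonant modes and background noise, so a classifier would likely need more than these four numbers, but they map directly onto the properties the model relied on.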

Object Rendering and Sound Prediction

In addition to audio sampling, the project aimed to predict an object’s sound signature based on its visual appearance using object rendering techniques. This involved feeding visual data into our predictive model to forecast the acoustic properties of an object even before tapping it.

Figure 5: Object Renderer in action for soup spoon

- Physics Integration: By applying principles of acoustic impedance and resonance frequency, the system could predict how different materials would respond to a tap based on their visual structure. This required understanding how sound waves behave when encountering materials of different densities and stiffness.

- Computer Science Techniques: Using machine learning techniques, the model was trained to simulate the physical interaction between the object tapper and the materials, using visual cues like texture, shape, and material thickness to predict the object’s acoustic response. By combining physics-based models with visual data, we aimed to predict sound signatures without needing physical contact in all cases.
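The acoustic impedance and resonance ideas mentioned above can be illustrated with textbook thin-bar formulas: wave speed c = sqrt(E/ρ), characteristic impedance Z = ρ·c, and first longitudinal resonance of a free-free bar f = c/(2L). The sketch below is only an illustration of the trend, not the project's predictive model. The material values are rounded handbook-style placeholders rather than measurements, and the 0.15 m bar length is hypothetical.

```python
import math

def bar_acoustics(youngs_modulus_pa, density_kg_m3, length_m):
    """Thin-bar estimates of wave speed, impedance, and first resonance."""
    speed_m_s = math.sqrt(youngs_modulus_pa / density_kg_m3)  # c = sqrt(E / rho)
    impedance = density_kg_m3 * speed_m_s                     # Z = rho * c
    fundamental_hz = speed_m_s / (2.0 * length_m)             # free-free bar, mode 1
    return speed_m_s, impedance, fundamental_hz

# Rounded, illustrative (E in Pa, density in kg/m^3) pairs; NOT measured values.
materials = {
    "steel": (200e9, 7850.0),
    "wood (along grain)": (11e9, 700.0),
    "plastic (PLA)": (3.5e9, 1240.0),
}

bar_length_m = 0.15  # hypothetical object length

results = {}
for name, (modulus, density) in materials.items():
    results[name] = bar_acoustics(modulus, density, bar_length_m)
    speed, impedance, f1 = results[name]
    print(f"{name}: c = {speed:.0f} m/s, Z = {impedance:.3g} Pa*s/m, f1 = {f1:.0f} Hz")
```

Real objects ring in modes that depend strongly on their shape, so these numbers only show why stiffer and denser materials tend to sound different when tapped.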

Final CAD Design with Custom Mounts

- Overview: The Franka-mounted Spring-Loaded Object Tapper is designed to deliver gentle, repeatable taps that excite material resonances for audio classification while protecting the object, microphone, and camera. The end-effector converts a small gripper squeeze into a controlled out-of-plane tap using a compliant TPU spring element and a replaceable PLA striker tab, with the camera offset to maintain line-of-sight and avoid collisions.

TPU was selected for the spring due to its inherent damping (damping ratio ζ ≈ 0.1–0.2), fatigue resistance, and safe compliance; its spring rate can be tuned precisely through geometry. PLA is used solely for the striker to provide a crisp, consistent impulse while maintaining repeatable contact geometry.

Figure 6a: Expanded view: Final tapper design

Figure 6b: Final tapper design

- Dynamics of Tapper and Design: With an effective end-effector mass of ~0.05 kg and an approach velocity of 0.20 m/s, the resulting kinetic energy (~0.001 J) and a peak force target of 2–5 N led to a spring rate of ~2,500 N/m. A cantilevered TPU beam (15 mm long, 10 mm wide, 5 mm thick) achieves this target, with 1–2 mm of preload improving repeatability.

The natural frequency of the system is ~36 Hz, and TPU damping suppresses multi-cycle ringing, capturing most of the impulse within the first 10–15 ms. This ensures a clean and measurable initial tap for audio classification without introducing spurious vibrations.

The PLA striker, with a 1.5–2.0 mm nose radius, concentrates pressure to excite higher frequencies while keeping stresses below PLA’s yield limit. The striker is field-replaceable to maintain impulse consistency over time.
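The figures quoted above can be cross-checked with a short calculation. This is a sketch under simplifying assumptions that are mine rather than the original analysis: an undamped single mass on a linear spring, an energy balance that ignores preload, and a small-deflection end-loaded cantilever (k = 3EI/L³), which is only a rough model for a beam this short and thick.

```python
import math

# Values stated in the write-up.
mass_kg = 0.05
approach_speed_m_s = 0.20
spring_rate_n_m = 2500.0
beam_length_m, beam_width_m, beam_thickness_m = 0.015, 0.010, 0.005

# Kinetic energy of the moving mass at contact.
kinetic_energy_j = 0.5 * mass_kg * approach_speed_m_s ** 2

# Energy balance 0.5*m*v^2 = 0.5*k*x^2 gives the spring compression and peak force.
max_compression_m = approach_speed_m_s * math.sqrt(mass_kg / spring_rate_n_m)
peak_force_n = spring_rate_n_m * max_compression_m

# Undamped natural frequency and the half period (rough contact duration).
natural_freq_hz = math.sqrt(spring_rate_n_m / mass_kg) / (2.0 * math.pi)
half_period_ms = 1000.0 * 0.5 / natural_freq_hz

# Elastic modulus implied by the beam geometry for the target spring rate.
second_moment_m4 = beam_width_m * beam_thickness_m ** 3 / 12.0
implied_modulus_pa = spring_rate_n_m * beam_length_m ** 3 / (3.0 * second_moment_m4)

print(f"kinetic energy   : {kinetic_energy_j:.4f} J")
print(f"max compression  : {max_compression_m * 1000:.2f} mm")
print(f"peak force       : {peak_force_n:.2f} N")
print(f"natural frequency: {natural_freq_hz:.1f} Hz")
print(f"half period      : {half_period_ms:.1f} ms")
print(f"implied modulus  : {implied_modulus_pa / 1e6:.0f} MPa")
```

Under these assumptions the peak force lands inside the 2–5 N target and the half period falls in the quoted 10–15 ms window. The implied modulus should be checked against the datasheet of the TPU filament actually printed.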

- Controls, Calibration, and Validation: The system approaches at 0.20 m/s and then triggers a micro-squeeze that compresses the spring 1–2 mm for a clean single impulse. Bench tests confirm the spring rate to within ±0.3 kN/m. Impulse repeatability across multiple materials shows a peak-amplitude coefficient of variation (COV) below 8% and a spectral centroid shift below 5%. TPU springs survive over 10,000 cycles without significant drift, outperforming PLA alternatives.
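For reference, the two repeatability metrics above could be computed as in the sketch below. The exact definitions used in the bench tests are not given here, so this assumes COV is the sample standard deviation divided by the mean, and that centroid shift is the largest relative deviation from the mean centroid. The input numbers are made-up placeholders that only exercise the functions; they are not project data.

```python
import numpy as np

def coefficient_of_variation(values):
    """Sample standard deviation divided by the mean."""
    values = np.asarray(values, dtype=float)
    return float(np.std(values, ddof=1) / np.mean(values))

def max_relative_shift(values):
    """Largest deviation from the mean, as a fraction of the mean (assumed definition)."""
    values = np.asarray(values, dtype=float)
    reference = np.mean(values)
    return float(np.max(np.abs(values - reference)) / reference)

# Placeholder values for five repeated taps on one object (NOT measurements).
peak_amplitudes = [0.81, 0.78, 0.84, 0.80, 0.79]
spectral_centroids_hz = [2100.0, 2150.0, 2080.0, 2120.0, 2095.0]

amplitude_cov = coefficient_of_variation(peak_amplitudes)
centroid_shift = max_relative_shift(spectral_centroids_hz)

print(f"peak amplitude COV : {amplitude_cov:.1%} (target < 8%)")
print(f"centroid shift     : {centroid_shift:.1%} (target < 5%)")
```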

In summary, the design achieves controlled, safe, and repeatable taps with low ring-down, combining first-principles spring tuning, compliant and damped materials, and precise CAD and FEA validation to ensure reliable performance for audio-based material classification.

Figure 7: Robot Arm tapper during sampling